GitLab CI/CD vs. GitHub Actions: A Comprehensive Comparison

A complete comparison of GitLab CI/CD and GitHub Actions

CI/CD is the cornerstone of every software project. Having an effective CI/CD strategy can make or break a project. It determines how fast you are shipping new features to customers, how quickly you can fix issues that will inevitably surface after a deployment, the speed at which your teams can iterate, and whether it makes your engineers’ lives easier or miserable. It makes sense to keep these systems close to the code because these systems are shipping that code. Git is ubiquitous throughout the industry for managing your codebase, so it was a no-brainer that the leaders in this space, GitLab and GitHub, created their own CI/CD workflow engines. These systems, GitLab CI/CD and GitHub Actions, have been gaining a lot of adoption in enterprise software. Each engine works the same on the surface; however, we believe that one of these engines is superior, and it’s why we tend to nudge our clients towards that solution where it makes sense for their organization. I am going to break down how these systems work, which one we think is the better solution, and why we have built our own product around it.

The Systems

Let’s take a look at how these systems behave from a high level before we start to differentiate the two. Each system is a workflow engine that runs CI/CD pipelines defined in YAML files. A pipeline is a directed acyclic graph (DAG) of the steps for building, testing, and deploying your source code. The units of work that make up a pipeline are called jobs. Each job executes a specific task or group of related tasks, and the pipeline moves from job to job until it completes or fails. Jobs can run in parallel or sequentially. They are executed on runners. Runners are servers that, you guessed it, run your jobs. Typically, they are Linux VMs or containers configured to execute the pipeline tasks. Both GitLab and GitHub have adopted this approach, and even the terms used to describe the CI/CD pipeline are mostly the same between these systems. The two start to diverge when we look at how pipelines are built, how they are structured in terms of dependencies and shared code, how they are presented to the end user, and how one goes about debugging a failed pipeline.
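To make the vocabulary concrete, here is a minimal sketch of a GitLab pipeline. The job names, stage names, and make targets are placeholders invented for illustration; they are not taken from any real project.

```yaml
# .gitlab-ci.yml (illustrative sketch; job names and commands are hypothetical)
stages:
  - build
  - test
  - deploy

build:app:
  stage: build
  script:
    - make build

# Both test jobs live in the same stage, so they can run in parallel.
test:unit:
  stage: test
  needs: ["build:app"]   # edge in the DAG: starts as soon as build:app succeeds
  script:
    - make test-unit

test:lint:
  stage: test
  needs: []              # no dependencies, so it can start immediately
  script:
    - make lint

deploy:dev:
  stage: deploy
  needs: ["test:unit", "test:lint"]
  script:
    - make deploy
```

GitHub Actions expresses the same ideas with slightly different keys: jobs sit under a top-level ‘jobs’ map, dependencies are declared with ‘needs’, and the runner is selected with ‘runs-on’.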

Code Reuse

As engineers, we are naturally lazy. It’s okay to own that. A large part of our job is to automate repeatable tasks. Why spend the mental cycles on trying to build the same thing, especially if it is a non-differentiator of what we are actually building and want to deploy? Naturally, there are going to be reusable tasks scattered throughout an organization’s CI/CD pipelines. Think dependency management, container scanning, security tools, deployment flows, etc. More importantly, code reuse allows us to enforce standardization around these types of tasks. Let’s discuss how each of these systems enables us to reuse what we have built.

GitLab

When writing a pipeline in GitLab, you have a few different ways to reuse jobs or attributes of those jobs. The first is what they call a hidden job. A hidden job can often be used as what I think of as a “base” job. This is a task that is fundamental to a pipeline, say setting up authentication for your cloud provider (AWS, Azure, GCP), or containerizing your application. You can use the ‘extends’ keyword to reuse configuration sections of those hidden jobs. The ‘extends’ keyword behaves similarly to YAML anchors. If you are familiar with anchors, you can use those natively in your GitLab pipelines as well. We can also reach for the ‘!reference’ custom YAML tag to select a specific piece of keyword configuration and reuse it throughout our pipelines. This last one is your scalpel that lets you use just what you need. Finally, we tie this all together with the ‘include’ keyword. This makes our pipelines composable by allowing us to split our base jobs out into templates for reuse. Let me show you a real-world example of all of these tools in practice. We use them to build out our Konfig by Real Kinetic CI pipelines. Here is the source code that we have open-sourced if you want to hop right into it.

We have a template for running gcloud tasks to interact with GCP. Below is our job to log in with gcloud, defined as a hidden job with an anchor. In the second job, we reference the login job using the ‘!reference’ tag.

    # gcloud.gitlab-ci.yml
    .gcloud_login: &gcloud_login
      before_script:
        - echo ${GITLAB_OIDC_TOKEN} > .ci_job_jwt_file
        - |
          gcloud iam workload-identity-pools create-cred-config $GCP_IDENTITY_PROVIDER \
            --service-account=$GCP_SA_EMAIL \
            --output-file=.gcp_temp_cred.json \
            --credential-source-file=.ci_job_jwt_file
        - gcloud auth login --update-adc --cred-file=`pwd`/.gcp_temp_cred.json
        - gcloud config set account $GCP_SA_EMAIL

    .gcloud_configure_target: &gcloud_configure_target
      before_script:
        - !reference [.gcloud_login, before_script]
        - gcloud config set project $GCP_PROJECT

Now, we can use the ‘include’ tag with the ‘extends’ keyword in another template to decouple our jobs from each other. This makes maintaining and debugging much simpler as the pipeline grows in complexity.

The snippet below, from our Konfig template file, shows how we build Dataflow jobs. We ‘include’ the gcloud jobs and can then reference them as needed, again using only the commands we need from the ‘before_script’.

    # konfig.gitlab-ci.yml
    include:
      - "/templates/gcloud.gitlab-ci.yml"

    .deploy-dataflow-flextemplate:
      before_script:
        - !reference [.gcloud_configure_target, before_script]
      script:
        - echo Creating flex-template spec file

It all comes together in our Konfig Dataflow template where we can break up the pipeline into stages and take advantage of the ‘extends’ keyword. This final template can now just focus on deploying the pieces it needs and abstracting away the pieces that it should not know about such as containerization and authorization.

    # konfig-dataflow/template.yml
    include:
      - "/templates/containerize.gitlab-ci.yml"
      - "/templates/konfig.gitlab-ci.yml"

    deploy:dataflow:dev:
      stage: dev
      needs: [deploy:workload:dev]
      extends: .deploy-dataflow-flextemplate
      variables:
        GCP_PROJECT: $GCP_PROJECT_DEV
        GCS_BUCKET: $GCS_BUCKET_DEV
        METADTA_FILE_PATH: $METADATA_FILE_PATH

What is great about GitLab’s ‘include’ feature is that it works across repositories. This means you can build very nicely structured, easy-to-read templates that can be reused. Magically, you can even include from git refs, so you can test changes before merging them into your templates. GitLab documents a range of common patterns, which are very helpful. This also allows you to trivially build pipelines within one project, then refactor common patterns out into a templates repository.
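As a rough sketch of what a cross-repository include can look like, the snippet below pulls the gcloud template from a separate templates project and extends one of its hidden jobs. The project path ‘my-group/ci-templates’ and the job name are assumptions made up for this example; only the ‘include’, ‘project’, ‘ref’, ‘file’, and ‘extends’ keywords come from GitLab itself.

```yaml
# .gitlab-ci.yml in a consuming project
# (the project path and job name are hypothetical)
include:
  - project: "my-group/ci-templates"
    ref: main                      # a branch, tag, or commit SHA
    file: "/templates/gcloud.gitlab-ci.yml"

configure:gcp:
  # Reuse the hidden job defined in the included template.
  extends: .gcloud_configure_target
  script:
    - gcloud config list
```

Pinning ‘ref’ to a tag rather than a branch is one way to keep consumers stable while the templates repository keeps evolving.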

For more complex tasks, you can build a purpose-built container. For operations where leveraging programming languages such as Python is helpful, we’ll often build a minimal container with only the Python script. This allows us access to any programming language to perform complex tasks. The behavior of GitLab’s CI system makes this completely seamless. These containers can live within a GitLab container registry that your projects can access — public or private.
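A job that uses such a container might look roughly like the sketch below. The image path, script location, and arguments are hypothetical; ‘$CI_REGISTRY’ is GitLab’s predefined variable for the instance’s container registry address.

```yaml
# Illustrative job; the image name, script path, and flags are hypothetical.
validate:config:
  stage: test
  # A minimal image that contains only the Python script and its dependencies.
  image: $CI_REGISTRY/my-group/ci-tools/config-validator:1.2.0
  script:
    - python /app/validate.py --path config/
```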

You can also use GitHub Actions directly as steps, but we find the native GitLab capabilities superior unless you are migrating large, complex existing pipelines.

GitHub

GitHub Actions gives us two main ways to reuse pieces of code in your pipelines: actions themselves and reusable workflows. Workflows in GitHub Actions are similar to templates in GitLab CI/CD, sort of. A workflow is a collection of jobs. A job is a collection of steps, and each step either runs shell commands or invokes an action. You can create your own custom actions or use actions that someone else has written and maintains. There are currently three types of actions: Docker container, JavaScript, and composite. The most prevalent type of action you will run into in the wild is JavaScript. To use an action in your workflow, you declare it in the step where you want it, as follows.

    steps:
      - uses: actions/javascript-action@v1

Sharing Actions between repositories within an organization is possible, but more involved to set up. In practice it is much more challenging to manage and build a library of reusable logic.

This type of system requires that you keep your actions up-to-date, or pin each action to a version that works or that your organization requires for security reasons. This adds overhead when writing your pipelines and can make them fragile over time, especially because the ‘uses’ clauses tend to proliferate across many repositories and workflows. I have spent countless hours debugging actions that I do not own, hamstringing release cycles. Hopefully, you own the actions that you are implementing in your pipeline; however, in practice, you always end up using third-party actions. This is not a bad thing per se. As mentioned above: why reinvent the wheel?

Because of GitHub Actions’ architecture, it lacks certain features and relies heavily on the open-source community to create and maintain “common” actions. As an example, at the time of writing, GitHub Actions does not natively support YAML anchors (the community is still waiting: Issue #1182, Issue #4501). To get a basic function such as an anchor, you need to use other actions or workarounds.

To combat the lack of code reusability, GitHub created reusable workflows and composite actions. When you call a workflow from another workflow, the entire called workflow is used. This can work well if you have complete workflows that can be adopted for your use case. If you do not need or want a whole workflow, you can use composite actions. Composite actions allow you to combine multiple steps into a single action. This gets us closer to GitLab’s approach; however, you still do not have the flexibility and ease of implementation that ‘include’, ‘extends’, and ‘!reference’ give you.
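For comparison, here is a minimal sketch of a composite action and a workflow that calls it. The file paths, action name, input name, and project ID are all hypothetical, and the sketch assumes the gcloud CLI is already available on the runner.

```yaml
# File 1: .github/actions/gcloud-target/action.yml (hypothetical composite action)
name: Configure gcloud target
description: Points the gcloud CLI at a given project
inputs:
  project:
    description: GCP project ID to target
    required: true
runs:
  using: composite
  steps:
    - run: gcloud config set project "$PROJECT"
      shell: bash                  # run steps in a composite action must declare a shell
      env:
        PROJECT: ${{ inputs.project }}
---
# File 2: .github/workflows/deploy.yml (hypothetical caller workflow)
name: deploy
on: push
jobs:
  dev:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4  # a local action must be checked out before it is used
      - uses: ./.github/actions/gcloud-target
        with:
          project: my-dev-project
```

A reusable workflow, by contrast, declares ‘on: workflow_call’ and is referenced at the job level with ‘uses’, so the caller pulls in all of its jobs rather than a handful of steps.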

Debugging

One can argue that with thoughtful design and strong ownership, code reusability is essentially solved in both systems. When it comes to pipeline debugging, GitLab hands down has the better toolset. I would suggest comparing the two; however, most of the features we are going to look at are non-existent in GitHub Actions.

GitLab

With GitLab, we get a Pipeline Editor. This is a one-stop shop for linting, validating, viewing your workflow YAML, and even selecting which version of a templated pipeline to use. Having come from GitHub Actions, after seeing this suite of tools, I wondered why something like this has not been implemented by GitHub. It really is a game-changer and saves tons of trial-and-error commits while building and debugging pipelines. Let’s go over what we get out of the box.

Edit

We get the ability to edit a pipeline. In the example above, we created a template for our pipelines. Here is how we use an entire pipeline in another repository. We can choose the tag or branch name of the pipeline version we want to use. Below, you can see I want to use the konfig-dataflow template from the main branch. While I was building out this template, I was able to switch to a @feature-branch to test and validate my changes. This alone has saved me hours of time and removed the potential for breaking downstream customers. You can also pass in variables as inputs to your pipeline here, which is another powerful tool we leverage for our products.
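In YAML terms, switching template versions and passing inputs could look something like the sketch below. The project path, branch name, and the ‘environment’ input are assumptions for illustration; the sketch also assumes the template declares that input under ‘spec:inputs’, which may not match how the Konfig templates are actually written.

```yaml
# .gitlab-ci.yml in a downstream repository
# (project path, ref, and input name are hypothetical)
include:
  - project: "my-group/ci-templates"
    ref: my-feature-branch         # switch back to main (or a tag) once the change is merged
    file: "/konfig-dataflow/template.yml"
    inputs:
      environment: dev             # only valid if the template declares this input
```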

Validating

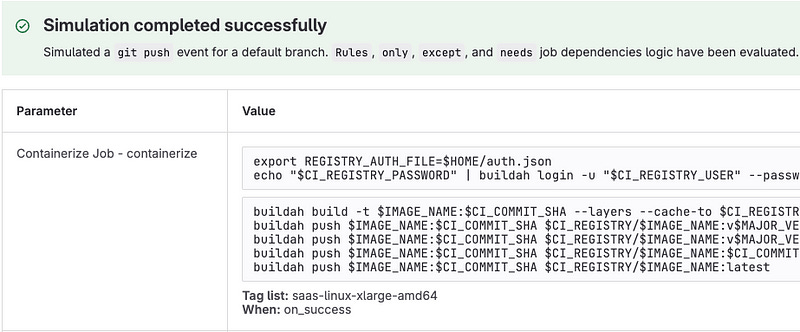

Once you have made changes and are ready to run your pipeline, you can validate your YAML code. Again, this is one of those features that you feel should come out of the box with every CI/CD system. When a validation completes successfully, you get a readout of the code your jobs will run. If there are failures, such as syntax errors or broken dependency logic, it reports the errors and tells you where the issue lies.

Full Configuration

To complete the review of the system, you can view the full configuration of the YAML file after it has been compiled (includes, anchors, and references expanded!). This is another nice feature: it lets you see how your merges worked if you have complex pipelines that include or reference other templates or contain many nested jobs. It also helps you see where pipelines are badly architected. A badly structured pipeline will be huge, with lots of rules to filter steps. If your pipelines look like that, you’re in for a painful time as you try to iterate because the blast radius is “everything.”

GitHub

With GitHub, you do not get a pipeline editor. It would be unfair to say that you can’t approximate these features with GitHub Actions, but you need to reach for third-party solutions to do so. That said, as of the writing of this article, there is no way to my knowledge to do the equivalent of GitLab’s pipeline editing.

Validating

GitHub offers an Actions toolkit that should make testing Actions easier. I never implemented it, so I cannot speak to its usability. Looking through the documentation and what others have written online, there is obviously some overhead, including writing custom code to test your pipeline. This will be a non-starter for almost everyone, and they invariably find themselves making tweaks to their workflow YAML files and seeing what breaks.

Full Configuration

GitHub Actions does give you the ability to view your workflow file, but only after the pipeline has been created and run. If you have long build times or if your issue is at the end of a pipeline, these are sunk hours. The good news is you will get to catch up on all your Slack and email messages!

Configuration and Organization

When working at an enterprise level, with many development teams across many business units, configuring and organizing your CI/CD toolkit is what can make or break your project deadlines. An important aspect of this organization is how your project structure is handled. At first glance, GitLab and GitHub seem very similar in how they structure project hierarchies. When someone sends you a link to their repository, in both systems you are presented with a list of directories and files, plus a README.md. However, when you start to implement a project, you begin to see the significant differences between the two. Let’s take a look at how project structure is handled in both GitLab and GitHub and how it lends itself to well designed systems.

GitLab

GitLab manages projects by groups and subgroups. Typically, for larger businesses, you will see an organization as a top-level group and have subgroups to differentiate between business units, or for housing internal and external content. Furthermore, each project and group has its own visibility: either public, internal, or private.

GitLab leverages this controlled tier system with its CI/CD platform. You can set CI variables at different levels of the hierarchy, which are inherited by lower tiers. This way, you can set environment variables or secrets at the group level and have them inherited for your projects. Similarly, we can manage user permissioning with this hierarchy. This feature is one of those things that, in retrospect, seems obvious but could not have been implemented as cleanly if a proper hierarchical structure had not been built into the system from the outset. We have found this feature extremely useful when integrating our own product with GCP, which uses a similar hierarchy. You can view more details from our writing on the topic.
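As a small illustration of that inheritance, the job below reads three variables without defining any of them. The split across levels shown in the comments is an assumption for this example, not a description of our actual setup.

```yaml
# Illustrative only. These variables are assumed to be defined in the GitLab UI
# at different levels of the hierarchy, not in this file:
#   top-level group : GCP_IDENTITY_PROVIDER
#   subgroup        : GCP_SA_EMAIL
#   project         : GCP_PROJECT
show:gcp:context:
  script:
    - echo "provider=$GCP_IDENTITY_PROVIDER"
    - echo "service_account=$GCP_SA_EMAIL"
    - echo "project=$GCP_PROJECT"
```

If the same variable is defined at more than one level, the value closest to the project wins, so a project can override what it inherits from its group.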

GitHub

GitHub uses organizations and repositories as its primary structure. It offers the ability to share secrets and variables from an organization to its repositories. However, for significantly larger organizations, this process can become messy without strong communication and a dedicated team to manage access. Typically, organizations set up teams to manage projects and repositories. In large enough organizations, this approach tends to degenerate into a swamp of repositories that rely on naming conventions to maintain some semblance of order. At this point, the team tasked with managing access often has little to no context about what each repository needs. You can imagine the inevitable headaches that ensue.
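On the workflow side, consuming a shared value looks roughly like the sketch below. The variable name, secret name, and deploy script are hypothetical. Note that nothing in the expression says whether the value comes from the organization or from the repository itself.

```yaml
# .github/workflows/deploy.yml (names and the script are hypothetical)
name: deploy
on: push
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - run: ./deploy.sh
        env:
          GCP_PROJECT: ${{ vars.GCP_PROJECT }}         # configuration variable
          DEPLOY_TOKEN: ${{ secrets.DEPLOY_TOKEN }}    # encrypted secret
```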

The Choice

It’s obvious, right? I didn’t write this post to just criticize GitHub or Actions. They are clearly doing alright, with an estimated 78.47% market share and broad adoption of Actions among businesses. However, when it comes to deciding on a CI/CD system and you have to choose between the two, GitLab has the better CI/CD offering. We at Real Kinetic are not sponsored by GitLab, nor do we get paid by them. We are a group of engineers who like to use the best tools for the job. In fact, we used to recommend GitHub to clients because it was the better product. However, GitLab has evolved a lot over the last few years.

That being said, it is not a perfect product. GitLab generally has a lot more features than GitHub, but the quality of and investment in some of those features can vary considerably. They seem willing to roll out new features but then kill or deprecate them quickly. The breadth of features also means that GitLab’s UI can be quite complex, which can make it slower. These are some of the trade-offs you will have to weigh when deciding whether to migrate your organization to GitLab if you are on another system.

Leveraging the above-mentioned patterns and design architectures, we have been able to ship code for our customers fast and efficiently, where they were bogged down before. We have built our own product, Konfig by Real Kinetic, around GitLab, which allows us to scale our business by migrating workloads securely to the cloud.

If you found this article interesting, please subscribe to our Substack for more content. If this article upset you, let me know why. I would love to have a conversation about it. If you are looking for some help with your CI/CD story or migrating to the cloud, please reach out to us!