Shipping Fast with FastAPI and Cloud Run

Since the beginning of time, software engineers have been asked to deliver features at breakneck speeds to keep their businesses ahead of the competition. You need to ship quickly, but the system must also adhere to common practices in today’s enterprise environment. This means deploying to the cloud in a containerized environment and working with multiple resources. You will need logging, monitoring, and a solid testing strategy. Recently, I faced this challenge and will show you how to quickly and confidently ship a backend API that integrates with Google Cloud, leveraging Firestore and Cloud Run for deployment.

In this post, we’ll build a backend API server using FastAPI, a lightweight framework for building APIs in Python, and deploy it to the cloud with Cloud Run. Our server will interact with Firestore, Google’s NoSQL database offering, using both the Discovery API and the Firestore client library. We’ll get under the hood and look at the source code, break down the Dockerfile that packages our application, use the gcloud CLI to deploy it, and finally check it all out in the console. If you aren’t familiar with Cloud Run, I will point you to the docs. If you are wondering why Cloud Run, we at Real Kinetic have some opinions on that, and you can check them out here. And if you just like building stuff or want to delve into serverless development using this stack, welcome!

If you would like to skip straight to the full codebase, head over to our open-source repo and check it out in our CodeLab: https://gitlab.com/real-kinetic-oss/code-lab/fast-firestore

The Stack

Python >= 3.10

Google account with a project where your services and resources live

The Scenario

We are tasked with spinning up an API that can:

Create a Firestore database with the location, type, and name given in the request body.

Query a Firestore collection for a specific item and return the item if it exists.

Create or update a document based on the body of the request.

We need to build this quickly using FastAPI, containerize it, and deploy it on Cloud Run.

Normally, you wouldn’t create Firestore databases from application code. This is typically done using an IaC tool like Config Connector. However, Config Connector doesn’t currently support the creation of Firestore databases. This example demonstrates how to create a database and provides an opportunity to utilize the Discovery API.

The Code

First, we need to write our Python code to define our application, our paths and path handler functions.

We’ll start with the endpoint to create our database. For this endpoint, I’m going to use the discovery client for interacting with the Firestore REST APIs. I want to demonstrate that there are other ways to interact with Google resources outside of the client libraries. Sometimes, the client libraries lack documentation or functionality, putting you in a situation where you might want to create your own client libraries.

When working with the discovery service, you need to create a resource for interacting with a service, in this case Firestore. At the time of writing, Cloud Firestore API v1 is the latest version of the API. You can reference the Firestore API docs here. We build our API calls based on the definition in the documentation for the “get databases” API call. You can see and test the call signature here. Notice that in the Python code, we are replicating that call. The same goes for the “databases create” call. These APIs are described in the discovery document, which can be used to craft your calls.
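To make the mapping between the REST documentation and the discovery client a little more concrete, here is a minimal sketch of the URLs those two calls resolve to. The helper names (`database_name`, `get_database_url`, `create_database_url`) are hypothetical and exist only for illustration; the discovery client assembles these requests for you, so treat this as a reading aid rather than part of the service.

```python
from urllib.parse import urlencode

# Base URL of the Cloud Firestore REST API, version v1.
FIRESTORE_ROOT = "https://firestore.googleapis.com/v1"


def database_name(project: str, database: str = "(default)") -> str:
    """Resource name passed as `name` to databases().get()."""
    return f"projects/{project}/databases/{database}"


def get_database_url(project: str, database: str = "(default)") -> str:
    """URL of the GET request behind databases().get(name=...)."""
    return f"{FIRESTORE_ROOT}/{database_name(project, database)}"


def create_database_url(project: str, database: str) -> str:
    """URL of the POST request behind databases().create(parent=..., databaseId=...)."""
    query = urlencode({"databaseId": database})
    return f"{FIRESTORE_ROOT}/projects/{project}/databases?{query}"
```

The `name` and `parent` strings here are the same ones passed to `get(name=...)` and `create(parent=..., databaseId=...)` in the endpoint that follows.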

    # /src/main.py

    import json  # needed to parse the error payload returned by the API

    from fastapi import FastAPI, status
    from googleapiclient import discovery
    from googleapiclient.errors import HttpError
    from pydantic import BaseModel, Field

    # FastAPI uses uvicorn as the ASGI web server
    import uvicorn


    # Pydantic model for defining the request body
    # Include sane defaults where it makes sense for database and location
    class PostRequest(BaseModel):
        project: str = Field(default="my-project")
        location: str = Field(default="nam5")
        type: str = Field(default="FIRESTORE_NATIVE")
        database: str = Field(default="(default)")


    # A very nice feature of FastAPI is generated OpenAPI docs out of the box.
    app = FastAPI(title="Service API", docs_url="/api/docs")

    # Create a discovery client for interacting with the Firestore API
    client = discovery.build("firestore", "v1")


    @app.post(
        "/database",
        status_code=status.HTTP_200_OK,
        description="Creates a new Firestore database",
    )
    async def create_db(request: PostRequest) -> dict:
        db_name = f"projects/{request.project}/databases/{request.database}"
        firestore_db = {}

        # Check if database already exists
        try:
            firestore_db = client.projects().databases().get(name=db_name).execute()
        except HttpError as error:
            if json.loads(error.content).get("error", {}).get("status") == "NOT_FOUND":
                print(f"Creating new db {db_name}")
            else:
                raise

        if firestore_db:
            return firestore_db

        # Create the db; any HttpError raised here propagates to the caller
        operation = (
            client.projects()
            .databases()
            .create(
                parent=f"projects/{request.project}",
                databaseId=request.database,
                body={"type": request.type, "location_id": request.location},
            )
            .execute()
        )
        return operation.get("response", {})

A few things to note when working with Firestore: the Cloud Run service account will need the Cloud Datastore Owner role to create databases. We also need to be mindful of Security Rules so that our database is not exposed to the world. By default, all mobile and web client reads and writes are denied, so only an IAM principal with the correct role will be able to access the database.
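The endpoint above leans on two response shapes: the JSON error body that comes back with an `HttpError`, and the operation object returned by the create call. The sketch below isolates both as plain functions so they can be exercised without credentials. It assumes the standard Google API error envelope (`{"error": {"status": ...}}`) and the usual long-running operation fields (`done`, `error`, `response`); the function names `error_status` and `operation_result` are hypothetical.

```python
import json
from typing import Any, Optional


def error_status(content: bytes) -> Optional[str]:
    """Return the `error.status` string from a JSON error body, if present."""
    try:
        payload = json.loads(content)
    except (ValueError, TypeError):
        # Not JSON at all, so there is no status to report.
        return None
    if not isinstance(payload, dict):
        return None
    error = payload.get("error")
    return error.get("status") if isinstance(error, dict) else None


def operation_result(operation: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Return a finished operation's response, or None while it is pending."""
    if not operation.get("done", False):
        return None
    if "error" in operation:
        raise RuntimeError(f"Operation failed: {operation['error']}")
    return operation.get("response", {})
```

One possible design choice, not taken in the endpoint above, is to return a 202 when `operation_result` yields `None`, since an empty response can also mean the database is still being created.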

By far, the create database endpoint will be our most complex endpoint. The next two endpoints will leverage the client libraries directly and demonstrate some simple queries against the database.

Next, we will create our endpoint to get a specific item from our database collection. Here, we are using the Firestore client library. This snippet sits below the POST endpoint we defined above. We will return the item if it exists and a 404 if it is not found. Notice the use of the status module to return the appropriate status.

    # /src/main.py

    from fastapi import FastAPI, HTTPException, status
    from google.cloud import firestore  # Firestore client module

    # FastAPI uses uvicorn as the ASGI web server
    import uvicorn

    # Create our client
    firestore_ = firestore.Client()

    #
    # FastAPI app and POST endpoint definitions are located here
    #


    @app.get(
        "/items/{item_id}",
        status_code=status.HTTP_200_OK,
        description="Get an item by item_id.",
    )
    async def get_item(item_id: str) -> dict:
        doc_ref = firestore_.collection("my-collection").document(item_id)
        # to_dict() returns None when the document does not exist
        doc = doc_ref.get().to_dict()
        if not doc:
            raise HTTPException(
                status_code=status.HTTP_404_NOT_FOUND,
                detail=f"The item {item_id} does not exist.",
            )
        return doc

Now, let’s move on to our last endpoint. This endpoint will either create a new document or update an existing one based on the request body passed to the REST endpoint. If a document already exists with exactly the same fields, the endpoint will return a 200 OK. If a new document is created or an existing document is updated, it will return a 201, indicating that an update or insert occurred.

Notice the use of the Response object to change the default status. Since we can have more than one successful status, this is a clean way to let the end-user know what has happened after the API was called.
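The status-code rule is easier to see without Firestore in the way. Here is a minimal sketch that uses a plain dictionary as a stand-in for the collection; the `upsert` function and the in-memory `store` are assumptions for illustration, not part of the service code.

```python
from http import HTTPStatus
from typing import Any


def upsert(
    store: dict[str, dict[str, Any]],
    item_id: str,
    payload: dict[str, Any],
) -> tuple[HTTPStatus, dict[str, Any]]:
    """Write payload under item_id and report which status applies."""
    existing = store.get(item_id)
    if existing == payload:
        # Nothing changed, so report a plain 200.
        return HTTPStatus.OK, existing
    # Either the item is new or its fields differ: write it and report 201.
    store[item_id] = dict(payload)
    return HTTPStatus.CREATED, store[item_id]
```

Calling `upsert` twice with the same payload yields a 201 and then a 200, which is the behavior the endpoint aims for.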

    # /src/main.py

    from fastapi import FastAPI, Response, status
    from pydantic import BaseModel

    # FastAPI uses uvicorn as the ASGI web server
    import uvicorn


    # Pydantic model representing the request body.
    class PutRequest(BaseModel):
        description: str
        rating: str


    #
    # FastAPI app, POST and GET endpoints definitions are located here
    #


    @app.put(
        "/items/{item_id}",
        status_code=status.HTTP_201_CREATED,
        description="Create or update item.",
    )
    def upsert_item(item_id: str, request: PutRequest, response: Response) -> dict:
        doc_ref = firestore_.collection("my-collection").document(item_id)
        doc = doc_ref.get().to_dict()

        # Nothing to change: override the default 201 with a 200
        if doc == request.model_dump():
            response.status_code = status.HTTP_200_OK
            return request.model_dump()

        doc_ref.set(request.model_dump())
        updated_doc = doc_ref.get().to_dict()
        return updated_doc


    # Run our uvicorn server on app startup
    if __name__ == "__main__":
        uvicorn.run("src.main:app", host="0.0.0.0", port=8080)

The Deployment

That is it for our Python server code! As you can see, a handful of short handlers gives us a fairly complete set of endpoints for interacting with Firestore. I would challenge you to write a delete endpoint for completeness if you are feeling up to it.

Below is the `requirements.txt` file listing our dependencies. Following that, you’ll find the Dockerfile that defines the container that will be deployed to the cloud.

    # requirements.txt

    fastapi==0.110.3
    google-api-core==2.19.0
    google-cloud-artifact-registry==1.11.3
    google-cloud-firestore==2.16.0
    google-api-python-client
    uvicorn==0.29.0

A quick note on the project directory structure: our source code lives in the `/src` directory. Our Python code is located in `main.py`, which informs the `CMD` instruction in our Dockerfile. The Dockerfile itself is at the root of the repo.

    # Dockerfile

    # Official Python image
    FROM python:3.12-alpine

    # Install build dependencies
    RUN apk add --no-cache gcc g++ musl-dev libffi-dev openssl-dev

    COPY . /src
    WORKDIR /src

    # Install Python dependencies
    RUN pip install -r requirements.txt

    EXPOSE 8080

    # Command to run when the container spins up.
    CMD ["python", "-m", "src.main"]

Our Dockerfile is ready to ship! It is time to deploy our application to Google Cloud Run.

Before we set sail, make sure you have a project in Google Cloud where you want our Cloud Run app to be located along with your Firestore database.

Make sure you have your project set in the gcloud CLI:

    $ gcloud config set project <YOUR-PROJECT>

Let’s enable the Artifact Registry API:

    $ gcloud services enable artifactregistry.googleapis.com

Create a Docker repo in Artifact Registry:

    $ gcloud artifacts repositories create medium-blog-repo \
        --repository-format=docker \
        --location=us-central1

You should see the repo in the Google Cloud Console under Artifact Registry > Repositories.

Authenticate Docker to Artifact Registry:

    $ gcloud auth configure-docker us-central1-docker.pkg.dev

Now, let’s build our Docker image:

    $ docker build -t us-central1-docker.pkg.dev/<YOUR-PROJECT-ID>/medium-blog-repo/medium-blog-api .

Note: If you are developing on a machine that is not x86_64 (AMD64), such as an Apple silicon (M-series) Mac, you’ll need to specify the target platform at build time using the Docker buildx plugin:

    $ docker buildx build --platform linux/amd64 -t us-central1-docker.pkg.dev/<YOUR-PROJECT-ID>/medium-blog-repo/medium-blog-api .

Push the Docker image to Artifact Registry:

    $ docker push us-central1-docker.pkg.dev/<YOUR-PROJECT-ID>/medium-blog-repo/medium-blog-api

Our Docker image is now hosted in Artifact Registry, sitting snugly in the medium-blog-repo we created earlier.
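The image path used in the build and push commands follows a fixed pattern: the regional Artifact Registry host, then the project, the repository, and the image name. If you end up scripting these steps, a small sketch of that pattern may help. The `image_uri` helper is hypothetical, and the default region simply mirrors the one used in this post.

```python
from typing import Optional


def image_uri(
    project_id: str,
    repository: str,
    image: str,
    region: str = "us-central1",
    tag: Optional[str] = None,
) -> str:
    """Build an Artifact Registry image path for a Docker repository."""
    uri = f"{region}-docker.pkg.dev/{project_id}/{repository}/{image}"
    # Append a tag only when one is given; the commands in this post omit it.
    return f"{uri}:{tag}" if tag else uri
```

When no tag is given, as in the commands above, Docker assumes `latest`.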

It’s time to deploy the Docker Image to Cloud Run:

    $ gcloud run deploy medium-blog-api \
        --image us-central1-docker.pkg.dev/<YOUR-PROJECT-ID>/medium-blog-repo/medium-blog-api \
        --region us-central1 \
        --allow-unauthenticated

The Finale

With our server successfully deployed, it’s time to put it to the test!

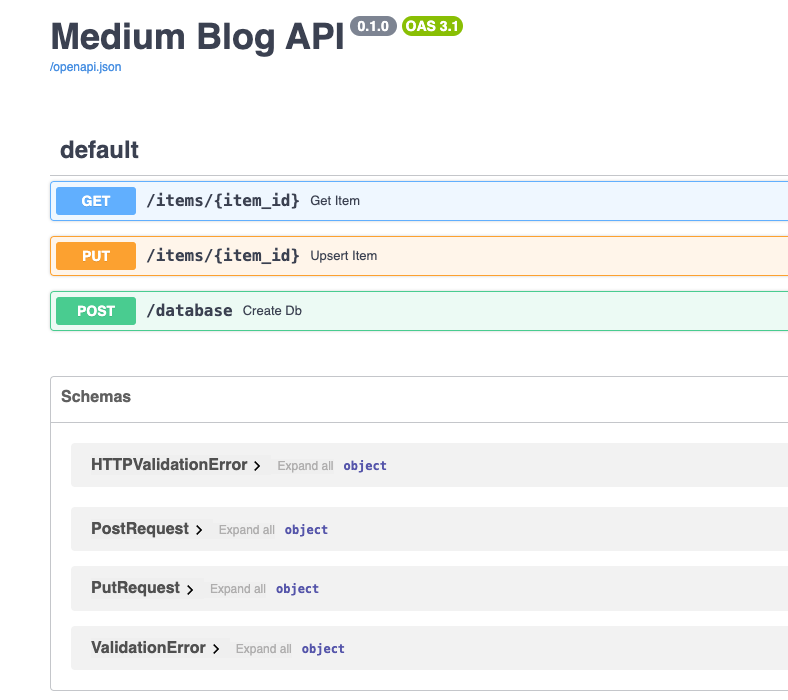

There are a few ways we can ensure our code is running smoothly. Firstly, upon successful deployment, the CLI will provide us with the Service URL. Navigating to this URL and appending /api/docs to the end will bring up our OpenAPI docs, where we can see our defined endpoints in all their glory.

Additionally, we can head over to the Cloud Run console to get a comprehensive view of our service. From here, we can dive into Metrics, Logs, Revisions, and even inspect the service specification YAML. It’s a fantastic setup for managing and monitoring our service, providing all the features we’d expect for an enterprise-grade solution.

Let’s kick off our testing journey by creating a database using our /database endpoint. We can do this directly from our /api/docs page and confirm that we receive a 200 status code. Afterward, a quick visit to Firestore in the console confirms our new database is up and running.

Next, let’s populate our database by creating an item using the PUT /items/{item_id} endpoint. By passing in our request body, we can verify that we receive a beautiful 201 response from the server, indicating that our new item has been successfully added.

A quick check in our database reveals our new item. Now, let’s put our update functionality to the test by making some tweaks to our item.

Update the item to make sure that functionality is working.

Last but certainly not least, let’s retrieve our item using the GET endpoint. Passing in the item ID should reward us with a satisfying 200 response, accompanied by the item we created earlier, proudly displayed for all to see.
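If you would rather script these checks than click through the docs page, the same calls can be sketched with the standard library. `SERVICE_URL` below is a made-up placeholder for the Service URL printed by the deploy command, and the helper names are hypothetical; the paths and body fields come from the endpoints defined earlier.

```python
import json
from urllib.request import Request

# Placeholder only: substitute the Service URL printed by `gcloud run deploy`.
SERVICE_URL = "https://your-service-url.example"


def put_item_request(base_url: str, item_id: str, description: str, rating: str) -> Request:
    """Build the PUT /items/{item_id} request with a JSON body."""
    body = json.dumps({"description": description, "rating": rating}).encode("utf-8")
    return Request(
        f"{base_url}/items/{item_id}",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


def get_item_request(base_url: str, item_id: str) -> Request:
    """Build the GET /items/{item_id} request."""
    return Request(f"{base_url}/items/{item_id}", method="GET")
```

Passing either request to `urllib.request.urlopen` sends it. Keep in mind that `urlopen` raises `urllib.error.HTTPError` for 4xx responses, so the 404 from the GET endpoint arrives as an exception rather than a return value.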

The Conclusion

Well, folks, we’ve reached the end of our adventure! If you have made it this far, you’ve seen firsthand how well FastAPI works with the Google Cloud ecosystem.

By tapping into both the discovery client and the Firestore client library, we integrated our FastAPI backend with Firestore: creating a database, reading items, and upserting documents. We then containerized the service and deployed it to Cloud Run.

I hope you found this article helpful, whether you are interested in backend cloud-native development or currently building a system with any of the technologies in this stack. If you have any questions about migrating or modernizing your tech stack, please feel free to reach out to us at Real Kinetic!