Testing Lambda and S3 code with VSCode and Jest

As always if this article made your life a little easier or allowed you to impress your peers, please be sure to applaud it and share it. If it did not work for you please leave a comment and I will get back to you. This article was not generated by a GPT but by a real life human who wants to interact with you!

I recently had to build a data processing pipeline in AWS. The client I was working with had established a few ground rules: the infrastructure needed to be created and managed using the AWS CDK, the language had to be TypeScript, and due to the sensitive nature of the data, the code had to be tested and validated. The pipeline was an event-driven system that kicked off a Lambda function whenever an object was written to S3. If you have worked with AWS in any capacity, you know how slow and painful development cycles can be when you test your changes in the AWS console. If you are working on a team, and most likely you are, you don’t want to be making changes to the stack in the console and getting angry Slack messages from your teammates asking who borked dev. I am going to walk through how I sped up my development by setting up unit tests early to catch annoying bugs and to test my data processing code quickly, without ever having to mess with the AWS console.

The Stack:

IDE: VSCode

Unit testing framework: Jest

Mock library: aws-sdk-client-mock

Here is the scenario: you have a Lambda function that is triggered by an S3 event notification whenever an object is put into a bucket. You want to take the records from that event, fetch the object each one points to, parse its contents as JSON, and process the result. You expect each object to contain newline delimited JSON, and there will be one or more records in each event. Your Lambda handler code looks something like the following:

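import type { Handler, S3Event, S3EventRecord } from 'aws-lambda';

export const handler: Handler = async (event: S3Event) => {

// Each event record names one object that was written to the bucket.

const s3Input = event.Records.map(

(record: S3EventRecord) => ({Bucket: record.s3.bucket.name, Key: record.s3.object.key})

);

// getRecords is async, so wait for every object to be fetched and parsed.

const records = await Promise.all(s3Input.map((input) => getRecords(input)));

// One array of records per object; flatten them into a single list.

processRecords(records.flat());

}

You have read the AWS documentation on S3 events, so you know what the structure of the S3Event object is going to be. You grab the bucket name and object key. You create your getRecords function and define it as such: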

type Record = {

recordId: number;

status: string;

language: string;

}

export const getRecords = async (input: GetObjectCommandInput): Promise<Record[]> => {

let response: GetObjectCommandOutput;

try {

const command = new GetObjectCommand(input);

response = await s3Client.send(command);

} catch (err) {

console.log(err.message);

throw err;

}

const jsonString = await response.Body.transformToString();

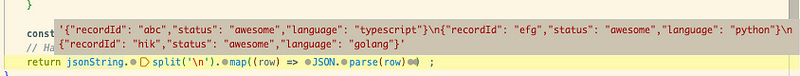

return jsonString.split('\n').map((row) => JSON.parse(row));

}You are using the most recent (at the time of writing) AWS SDK v3 for interacting with your AWS resources. Again, you are a diligent dev and made sure to read AWS’s absolutely amazing and totally straightforward documentation so you know how to work with the response type to get at your much needed data.
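Newline delimited JSON files often end with a trailing newline or contain the odd blank line, and JSON.parse throws on an empty string. Below is a minimal sketch of a standalone parser that tolerates both and reports which line failed. It is not the original implementation: the name parseNdjson is hypothetical, and skipping blank lines and accepting Windows line endings are assumptions about what your data looks like.

```typescript
// Hypothetical helper, not part of the original lambda.ts.
function parseNdjson<T = unknown>(text: string): T[] {
  const rows: T[] = [];
  // Split on \n or \r\n so files written on Windows parse the same way.
  const lines = text.split(/\r?\n/);
  lines.forEach((line, index) => {
    // Skip blank lines, including the empty string left by a trailing newline.
    if (line.trim() === '') return;
    try {
      rows.push(JSON.parse(line) as T);
    } catch (err) {
      const reason = err instanceof Error ? err.message : String(err);
      // Line numbers are 1-based to match what an editor shows.
      throw new Error(`Invalid JSON on line ${index + 1}: ${reason}`);
    }
  });
  return rows;
}

const sample = '{"recordId": "abc"}\n\n{"recordId": "efg"}\n';
console.log(parseNdjson(sample).length); // 2
```

Because the parser takes a plain string, it can be unit tested without mocking S3 at all, which keeps the S3 mock focused on the fetch itself.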

So, how do you test this thing? How do you know your JSON parsing is working? Are you getting all of the records from your event? Let me show you how.

First, install Jest with whichever package manager you use for your project, along with ts-jest so it can run your TypeScript directly. The tests below also need aws-sdk-client-mock and @smithy/util-stream as dev dependencies. I suggest following the ts-jest setup instructions, which also cover creating your jest.config.js file. It should look pretty simple:

/** @type {import('ts-jest').JestConfigWithTsJest} */

module.exports = {

preset: 'ts-jest',

testEnvironment: 'node',

collectCoverage: true

};

Update your test script in your package.json to run jest.

"scripts": {

"test": "jest",

"build": "tsc"

}

Create a directory and files for your unit tests. I like to keep the tests in their own test directory, so my structure looks something like this: src/lambda.ts houses the handler code, test/lambda.test.ts houses the unit tests, and test/test.json holds the test data. It is easy to reason about and a simple standard to implement and keep clean.

Notice in the above structure I have a test.json file. This file is going to hold the data that I expect will come from my S3 put event. For the current scenario as I mentioned above it will be newline delimited rows, each containing a JSON object:

{"recordId": "abc","status": "awesome","language": "typescript"}

{"recordId": "efg","status": "awesome","language": "python"}

{"recordId": "hik","status": "awesome","language": "golang"}

With the test data in place, the unit tests in test/lambda.test.ts look like this:

import * as fs from 'fs';

import * as path from 'path';

import { mockClient } from 'aws-sdk-client-mock';

import { GetObjectCommand, S3Client } from '@aws-sdk/client-s3';

import { beforeEach, describe, expect, it } from '@jest/globals';

import { sdkStreamMixin } from '@smithy/util-stream';

import * as lambda from '../src/lambda';

describe('test getRecords', () => {

// Create a mock for S3 at the beginning of your describe block.

const s3Mock = mockClient(S3Client);

const s3Input = {

Bucket: 'test-bucket',

Key: 'test.json',

};

// Make sure to reset your mocks before each test to avoid issues.

beforeEach(() => {

s3Mock.reset();

});

it('should read all lines of file', async () => {

// Create a stream from your test file.

const stream = sdkStreamMixin(

fs.createReadStream(path.resolve(__dirname, './test.json'))

);

// Return your test data when calling GetObjectCommand.

s3Mock.on(GetObjectCommand).resolves({

Body: stream,

});

const records = await lambda.getRecords(s3Input);

// Assert that you are reading through all the records.

expect(records).toHaveLength(3);

});

it('should have the correct data in array items', async () => {

const expected = [

{recordId: 'abc', status: 'awesome', language: 'typescript'},

{recordId: 'efg', status: 'awesome', language: 'python'},

{recordId: 'hik', status: 'awesome', language: 'golang'},

];

const stream = sdkStreamMixin(

fs.createReadStream(path.resolve(__dirname, './test.json'))

);

s3Mock.on(GetObjectCommand).resolves({

Body: stream,

});

const records = await lambda.getRecords(s3Input);

// Assert that your JSON parsing is working.

records.forEach((record) => {

expect(record).toHaveProperty('recordId');

expect(record).toHaveProperty('status');

expect(record).toHaveProperty('language');

});

expect(records).toEqual(expect.arrayContaining(expected));

});

it('should throw on S3 error', async () => {

// Sets a failure response returned from S3Client.send().

s3Mock.on(GetObjectCommand).rejects('Failed to get object from S3.');

await expect(lambda.getRecords(s3Input)).rejects.toThrow();

});

});

Now, how can we run these tests and actually step through the code? As mentioned before, I am using VSCode and its built-in debugger. It is trivial to set up and easy to use. From your package.json you can click on the “debug” icon to start your tests, then select the test script (shown as “test jest”).

Set breakpoints in your code by clicking to the left of the line numbers in the editor.

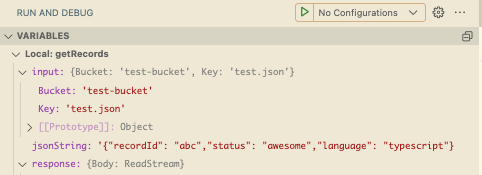

In the run and debug panel on the left side of the editor you will now be able to check the state of your program. This is very helpful in particular to see the value of variables.

You can see here what the value of our jsonString variable is. Pro-Tip: if you hover over the line of code where the execution is stopped you can view the value at that point.

Now you are no longer guessing at what your code is doing; you can see it! You no longer need to litter your code with console.log statements, and you can deploy your code to AWS knowing how it will behave. One last tidbit before wrapping this up: pass the --coverage flag to your test run to print out a nice coverage report. With collectCoverage: true set in the jest.config.js shown earlier, the report is already printed on every run.
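The tests in this article call getRecords directly. To exercise the handler as well, you need an S3 event to pass in. Here is a minimal sketch of a fixture builder and the matching bucket and key extraction. The types MinimalS3Record and MinimalS3Event and the helpers makeS3Event and toGetObjectInputs are hypothetical and model only the fields the handler reads; in a real project you would likely use the S3Event type from the aws-lambda typings instead. The sketch also decodes the object key, because S3 delivers keys URL-encoded in event notifications (a space arrives as +). The encoding in the builder only approximates what S3 sends.

```typescript
// Hypothetical minimal shapes: only the fields the handler reads.
type MinimalS3Record = {
  s3: { bucket: { name: string }; object: { key: string } };
};
type MinimalS3Event = { Records: MinimalS3Record[] };

// Build a fixture event for one bucket and any number of object keys.
// Keys are URL-encoded with spaces as "+", approximating what S3 sends.
function makeS3Event(bucket: string, keys: string[]): MinimalS3Event {
  return {
    Records: keys.map((key) => ({
      s3: {
        bucket: { name: bucket },
        object: { key: encodeURIComponent(key).replace(/%20/g, '+') },
      },
    })),
  };
}

// Turn each event record into a { Bucket, Key } pair, the shape GetObjectCommand
// takes as input, decoding the key back to its original form.
function toGetObjectInputs(event: MinimalS3Event): { Bucket: string; Key: string }[] {
  return event.Records.map((record) => ({
    Bucket: record.s3.bucket.name,
    Key: decodeURIComponent(record.s3.object.key.replace(/\+/g, ' ')),
  }));
}

const fixtureEvent = makeS3Event('test-bucket', ['test.json', 'daily export/2024 01.json']);
console.log(toGetObjectInputs(fixtureEvent));
```

In a handler test you could pass an event like this to the handler, keep the same s3Mock setup for GetObjectCommand, and assert on how many times the mock was called.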

If you are looking for assistance on cloud development or if your project is stuck and you’re unsure where to seek guidance, Real Kinetic is here to help! We have decades of experience working in complex systems and delivering projects on time while leveling up the devs around us.